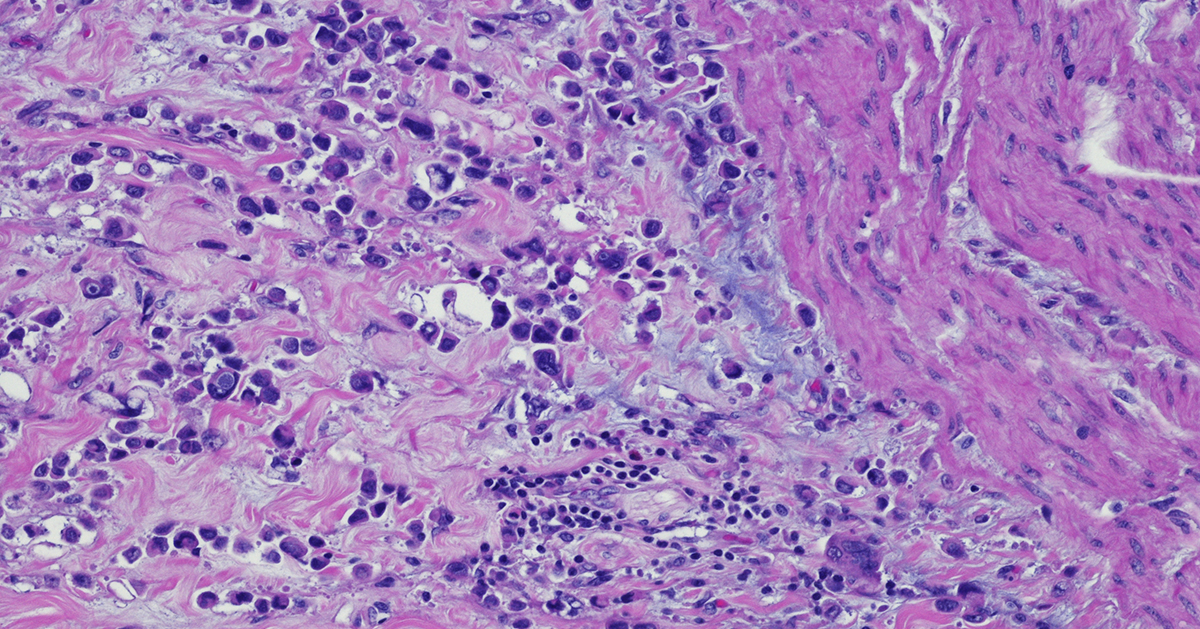

High dimensional omics datasets often include many features that contribute little to downstream analysis. This can blur structure in unsupervised tasks, slow computation, and complicate model training. The MADVAR study introduces two simple, data driven procedures that set feature selection thresholds from the distribution of the data itself, rather than relying on fixed heuristics. The first procedure, madvar, computes a variance cutoff using the median plus a multiple of the median absolute deviation. The second, intersect Distributions, fits a two component Gaussian mixture to the variance or another continuous score, and uses the intersection point between components as the cutoff. Both methods are implemented in an R package and were evaluated across public datasets that include TCGA gene expression, GTEx proteomics, and CPTAC phosphoproteomics. The paper reports improvements in unsupervised clustering quality and competitive supervised performance with fewer features, while keeping runtime and memory use modest.

What the Paper Tested

The benchmarking examined unsupervised structure and supervised classification. For unsupervised analysis, the authors applied filtering and then assessed cluster quality with connectivity, the Dunn index, and the Biological Homogeneity Index. Across datasets, the variance based approaches produced tighter or more homogeneous clusters on these metrics. For supervised analysis, they trained random forest models with repeated runs. Both approaches produced low out of bag error rates. Retaining more features sometimes improved accuracy, but MADVAR often matched the mixture based approach while selecting fewer features. The paper also documents practical defaults, such as Ward.D for hierarchical clustering with Euclidean distance, and explains how to pass either a raw matrix or a precomputed variance vector into the functions. Source code and documentation are available on GitHub.

When These Methods are a Good Fit

These procedures are particularly well suited for rapid, large-scale preprocessing, when analyses require a quick, efficient, and transparent approach to feature selection prior to dimensionality reduction, clustering, or model fitting. They also integrate naturally into interpretability-oriented pipelines, since thresholds based on medians or mixture-model intersections are simple to explain and justify to collaborators. Because the logic operates on continuous feature scores, the same framework can be applied seamlessly to any quantitative data type that can be summarized by variability Things to keep in mind.

Variance is a proxy for informativeness, not a guarantee. Low variance does not always mean a feature is uninformative. Some biomarkers remain stable yet become predictive in combination with others. If domain knowledge indicates a feature should be preserved, the package allows must keep lists. The mixture based method assumes the variance distribution resembles a two-component mixture. If the fit is poor, the intersection may not be meaningful, so density plots are worth inspecting before adopting the cutoff. Downstream metric choice also matters. Gains in the Biological Homogeneity Index or Dunn index describe cluster characteristics, which may not translate to improvements on other endpoints such as survival modeling or dose response prediction. Finally, supervised performance can depend on class imbalance and sample size. If your data are skewed, tune the learner and validate with a scheme that reflects your use case.

How to Apply, a Straightforward Workflow

A practical workflow starts by exploring the variance distribution. Plot the density (using the madvar flag `plot_density = TRUE`), confirm whether there is a near zero peak, and decide whether a MAD based threshold or a two-component mixture are appropriate. Set a conservative first pass using the default MAD multiplier (`mads = 2`) and adjust if another stringency level is preferred, or, if you prefer the mixture approach, verify the intersection visually before you commit to the cutoff. Preserve domain critical features by whitelisting known markers or controls that should not be dropped. Re run the planned clustering or model fitting, compare structure and error rates before and after filtering, and record any change in feature counts and compute time so the impact is transparent to collaborators.

Reproducibility and availability

The R implementation and documentation are available on GitHub, as referenced in the paper. The evaluations draw on TCGA gene expression, GTEx proteomics, and CPTAC phosphoproteomics, with figures that show density plots, clustering metrics, and classification results. The article appears as an Application Note in Bioinformatics Advances and is available for open access.

Summary

MADVAR provides two transparent, variance-based rules for feature selection, enabling the removal of near invariant features from large omics matrices. In the reported benchmarks, these procedures improved or maintained clustering quality and supervised accuracy while substantially reducing feature counts and computational load. The approach is easy to inspect, easy to explain, and simple to integrate into existing R workflows. As with any filter, final value depends on the analysis goal, so it is worth validating its effects on the endpoints that matter for your study.

Silberberg G. “MADVAR, a lightweight, data driven tool for automated feature selection in omics data.” Bioinformatics Advances 2025, vbaf211. doi, 10.1093/bioadv/vbaf211.